Trump Android words

This is the basis for a live coding exercise.

Inspired by David Robinson’s excellent blog post: Text analysis of Trump’s tweets confirms he writes only the (angrier) Android half.

Load some tweets from the official Donald Trump account.

library(purrr)

suppressMessages(library(dplyr))

library(tibble)

#load(url("http://varianceexplained.org/files/trump_tweets_df.rda"))

load("trump_tweets_df.rda")

#glimpse(trump_tweets_df)

tweets <- trump_tweets_df$text

tweets %>% head() %>% strtrim(70)

#> [1] "My economic policy speech will be carried live at 12:15 P.M. Enjoy!"

#> [2] "Join me in Fayetteville, North Carolina tomorrow evening at 6pm. Ticke"

#> [3] "#ICYMI: \"Will Media Apologize to Trump?\" https://t.co/ia7rKBmioA"

#> [4] "Michael Morell, the lightweight former Acting Director of C.I.A., and "

#> [5] "The media is going crazy. They totally distort so many things on purpo"

#> [6] "I see where Mayor Stephanie Rawlings-Blake of Baltimore is pushing Cro"Trump Android words

Here are some words that were shown to be associated with Trump tweets from an Android device. Smush them together into a regular expression – we’ll use it soon!

regex <- "badly|crazy|weak|spent|strong|dumb|joke|guns|funny|dead"Our mission: extract these Trump Android words from the tweets we’ve stored. While getting lots of purrr practice.

A small mercy from the future: we will learn just as much (or more) if we scale down this problem. Allow me to preselect rows that have all the complexity we need.

tweets <- tweets[c(1, 2, 5, 6, 198, 347, 919)]

tweets %>% strtrim(70)

#> [1] "My economic policy speech will be carried live at 12:15 P.M. Enjoy!"

#> [2] "Join me in Fayetteville, North Carolina tomorrow evening at 6pm. Ticke"

#> [3] "The media is going crazy. They totally distort so many things on purpo"

#> [4] "I see where Mayor Stephanie Rawlings-Blake of Baltimore is pushing Cro"

#> [5] "Bernie Sanders started off strong, but with the selection of Kaine for"

#> [6] "Crooked Hillary Clinton is unfit to serve as President of the U.S. Her"

#> [7] "The Cruz-Kasich pact is under great strain. This joke of a deal is fal"FYI I preselected tweets with 0, 1, 2, and 3 occurrences of Trump Android words.

Are you ready for gregexpr()?

No, you are not.

Use the base function gregexpr() to locate all the Trump Android words inside the tweets. I chose gregexpr() for the glorious opportunity to deal with an Awkward List.

matches <- gregexpr(regex, tweets)

str(matches)

#> List of 7

#> $ : int -1

#> ..- attr(*, "match.length")= int -1

#> ..- attr(*, "index.type")= chr "chars"

#> ..- attr(*, "useBytes")= logi TRUE

#> $ : int -1

#> ..- attr(*, "match.length")= int -1

#> ..- attr(*, "index.type")= chr "chars"

#> ..- attr(*, "useBytes")= logi TRUE

#> $ : int 20

#> ..- attr(*, "match.length")= int 5

#> ..- attr(*, "index.type")= chr "chars"

#> ..- attr(*, "useBytes")= logi TRUE

#> $ : int 134

#> ..- attr(*, "match.length")= int 4

#> ..- attr(*, "index.type")= chr "chars"

#> ..- attr(*, "useBytes")= logi TRUE

#> $ : int [1:2] 28 95

#> ..- attr(*, "match.length")= int [1:2] 6 4

#> ..- attr(*, "index.type")= chr "chars"

#> ..- attr(*, "useBytes")= logi TRUE

#> $ : int [1:2] 87 114

#> ..- attr(*, "match.length")= int [1:2] 4 6

#> ..- attr(*, "index.type")= chr "chars"

#> ..- attr(*, "useBytes")= logi TRUE

#> $ : int [1:3] 50 112 123

#> ..- attr(*, "match.length")= int [1:3] 4 4 4

#> ..- attr(*, "index.type")= chr "chars"

#> ..- attr(*, "useBytes")= logi TRUE

matches[[7]]

#> [1] 50 112 123

#> attr(,"match.length")

#> [1] 4 4 4

#> attr(,"index.type")

#> [1] "chars"

#> attr(,"useBytes")

#> [1] TRUEWhat is matches?!?

- A list. One element per element of

tweets. - Each element is an integer vector.

- It’s

-1if no matches found. - Holds the position(s) of the first character of each match, otherwise.

- It’s

- Each element has two attributes. Consider

match.length. Let us not speak of the other one.- It’s

-1if no matches found. - Holds the length(s) of each match, otherwise.

- It’s

We can clearly extract the matched words with this information. But it’s going to hurt.

Preview of substring() target function

Our eventual target function is substring(). Read the help on it now! Here are the highlights:

USAGE:

substring(text, first, last)

ARGUMENTS:

text = character

first = integer, position where substring to extract starts

last = integer, position where substring to extract stopsImagine each tweet playing the role of text.

The elements of matches are awfully close to what we need for first.

But we don’t have anything to use for last yet.

This is going to be Job #1.

Job #2 is to insert substring() and tweets + matches + result of Job #1 into the purrr::map() machinery.

Here’s where we are heading:

Get you know your Awkward List

How long are the elements of matches?

lengths(matches) # just happens to exist for length

#> [1] 1 1 1 1 2 2 3

sapply(matches, length) # NSFP = not safe for programming

#> [1] 1 1 1 1 2 2 3

vapply(matches, length, integer(1)) # preferred base approach

#> [1] 1 1 1 1 2 2 3

map_int(matches, length) # purrr way

#> [1] 1 1 1 1 2 2 3Exercise: Get a list of the match lengths.

Each element of matches carries this information in an attribute named match.length(). Store this info in a list called match_length.

- Pick one nontrivial example, e.g.

m <- matches[[7]]. - Get the attribute named

match.length. Hint:attr(). - Drop that approach into

purrr::map()to scale up to the fullmatcheslist.

Here’s how to do that for the last element of matches:

m <- matches[[7]]

attr(m, which = "match.length")

#> [1] 4 4 4Different ways to apply this logic to the entire matches list.

1 Pre-defined custom function. Conceptually simplest? Most verbose.

ml <- function(x) attr(x, which = "match.length")

map(matches, ml)

#> [[1]]

#> [1] -1

#>

#> [[2]]

#> [1] -1

#>

#> [[3]]

#> [1] 5

#>

#> [[4]]

#> [1] 4

#>

#> [[5]]

#> [1] 6 4

#>

#> [[6]]

#> [1] 4 6

#>

#> [[7]]

#> [1] 4 4 42 Anonymous function. More abstract? Very compact.

map(matches, ~ attr(.x, which = "match.length"))

#> [[1]]

#> [1] -1

#>

#> [[2]]

#> [1] -1

#>

#> [[3]]

#> [1] 5

#>

#> [[4]]

#> [1] 4

#>

#> [[5]]

#> [1] 6 4

#>

#> [[6]]

#> [1] 4 6

#>

#> [[7]]

#> [1] 4 4 43 Pre-existing function, additional arguments passed via ....

(match_length <- map(matches, attr, which = "match.length"))

#> [[1]]

#> [1] -1

#>

#> [[2]]

#> [1] -1

#>

#> [[3]]

#> [1] 5

#>

#> [[4]]

#> [1] 4

#>

#> [[5]]

#> [1] 6 4

#>

#> [[6]]

#> [1] 4 6

#>

#> [[7]]

#> [1] 4 4 4It’s good to know about all 3 approaches.

Exercise: Count the number of Trump Android words in each tweet.

Let’s compute how many Trump Android words appear in each tweet.

This isn’t quite lengths(matches), though, is it? Think about those -1s. Sad.

- Pick two examples at the extremes: a tweet with 0 Trump words and another with 3.

- Write some code that takes the associated element of

matchesand returns 0 or 3, as appropriate. - Use one of the approaches above to insert this into

purrr::map()and apply tomatches.

Code that works for both extreme examples:

m <- matches[[1]]

sum(m > 0)

#> [1] 0

m <- matches[[7]]

sum(m > 0)

#> [1] 3Insert into the machinery:

f <- function(x) sum(x > 0)

map(matches, f)

#> [[1]]

#> [1] 0

#>

#> [[2]]

#> [1] 0

#>

#> [[3]]

#> [1] 1

#>

#> [[4]]

#> [1] 1

#>

#> [[5]]

#> [1] 2

#>

#> [[6]]

#> [1] 2

#>

#> [[7]]

#> [1] 3

map(matches, ~ sum(.x > 0))

#> [[1]]

#> [1] 0

#>

#> [[2]]

#> [1] 0

#>

#> [[3]]

#> [1] 1

#>

#> [[4]]

#> [1] 1

#>

#> [[5]]

#> [1] 2

#>

#> [[6]]

#> [1] 2

#>

#> [[7]]

#> [1] 3Note that only 2 of the 3 approaches are workable here. That’s why it’s nice to know all of them.

What is the resulting object?

What would be a simpler form of the same info?

Read the help on map_int() and its other type-specific friends.

Tweak your existing approach to return an integer vector, with length equal to the number of tweets.

map_int(matches, ~ sum(.x > 0))

#> [1] 0 0 1 1 2 2 3Confirm that this is, indeed, different from just taking the lengths of the elements of matches:

tibble(

naive_length = lengths(matches),

n_words = map_int(matches, ~ sum(.x > 0))

)

#> # A tibble: 7 x 2

#> naive_length n_words

#> <int> <int>

#> 1 1 0

#> 2 1 0

#> 3 1 1

#> 4 1 1

#> 5 2 2

#> 6 2 2

#> 7 3 3Strip the attributes from matches

Exercise!

We have safely stored the match lengths in match_length.

Let’s create an almost-copy of matches and call it match_first. How will it differ? Remove the attributes from the elements of matches, so there’s less clutter when we print.

Hint: as.vector() will strip attributes.

(match_first <- map(matches, as.vector))

#> [[1]]

#> [1] -1

#>

#> [[2]]

#> [1] -1

#>

#> [[3]]

#> [1] 20

#>

#> [[4]]

#> [1] 134

#>

#> [[5]]

#> [1] 28 95

#>

#> [[6]]

#> [1] 87 114

#>

#> [[7]]

#> [1] 50 112 123Assess progress in a small example

Use the R objects on hand to achieve our goal in a small example: extract Trump words from single tweet. Work on tweets #1 and #7 because they represent the two extremes, 0 and 3 words respectively. If you can handle them, you’re in good shape.

Relevant R objects:

tweets %>% strtrim(70)

#> [1] "My economic policy speech will be carried live at 12:15 P.M. Enjoy!"

#> [2] "Join me in Fayetteville, North Carolina tomorrow evening at 6pm. Ticke"

#> [3] "The media is going crazy. They totally distort so many things on purpo"

#> [4] "I see where Mayor Stephanie Rawlings-Blake of Baltimore is pushing Cro"

#> [5] "Bernie Sanders started off strong, but with the selection of Kaine for"

#> [6] "Crooked Hillary Clinton is unfit to serve as President of the U.S. Her"

#> [7] "The Cruz-Kasich pact is under great strain. This joke of a deal is fal"

match_first

#> [[1]]

#> [1] -1

#>

#> [[2]]

#> [1] -1

#>

#> [[3]]

#> [1] 20

#>

#> [[4]]

#> [1] 134

#>

#> [[5]]

#> [1] 28 95

#>

#> [[6]]

#> [1] 87 114

#>

#> [[7]]

#> [1] 50 112 123

match_length

#> [[1]]

#> [1] -1

#>

#> [[2]]

#> [1] -1

#>

#> [[3]]

#> [1] 5

#>

#> [[4]]

#> [1] 4

#>

#> [[5]]

#> [1] 6 4

#>

#> [[6]]

#> [1] 4 6

#>

#> [[7]]

#> [1] 4 4 4Start with tweet #7, with 3 Trump words.

(tweet <- tweets[7])

#> [1] "The Cruz-Kasich pact is under great strain. This joke of a deal is falling apart, not being honored and almost dead. Very dumb!"

(t_first <- match_first[[7]])

#> [1] 50 112 123

(t_length <- match_length[[7]])

#> [1] 4 4 4

(t_last <- t_first + t_length - 1)

#> [1] 53 115 126

substring(tweet, t_first, t_last)

#> [1] "joke" "dead" "dumb"How well does this code work for tweet #1, with 0 Trump words?

(tweet <- tweets[1])

#> [1] "My economic policy speech will be carried live at 12:15 P.M. Enjoy!"

(t_first <- match_first[[1]])

#> [1] -1

(t_length <- match_length[[1]])

#> [1] -1

(t_last <- t_first + t_length - 1)

#> [1] -3

substring(tweet, t_first, t_last)

#> [1] ""There is some nonsense along the way and we get an empty string. I’d rather get character(0) or NA_character_ back, but I can live with this.

But we’re in good shape. We just need to compute where the matches end for all matches.

Store where Trump words end

Make a list that holds where the Trump words end. Call it match_last.

Pseudo-code for how we did this for a single tweet:

first <- an element of matches

length <- an element of match_length

last <- first + length - 1This is another map()-type problem, but instead of mapping over one list, we need to map over 2 lists in parallel.

Read the help for purrr::map2()!

Here’s the usage:

map2(.x, .y, .f, ...)Match this up to our task:

- Input 1 =

.xwill befirst - Input 2 =

.ywill belength - Function

.fwill be something that doesfirst + length - 1. Either a custom pre-defined function or an anonymous function. Your call.

You do it!

(match_last <- map2(match_first, match_length, ~ .x + .y - 1))

#> [[1]]

#> [1] -3

#>

#> [[2]]

#> [1] -3

#>

#> [[3]]

#> [1] 24

#>

#> [[4]]

#> [1] 137

#>

#> [[5]]

#> [1] 33 98

#>

#> [[6]]

#> [1] 90 119

#>

#> [[7]]

#> [1] 53 115 126Extract the Trump words

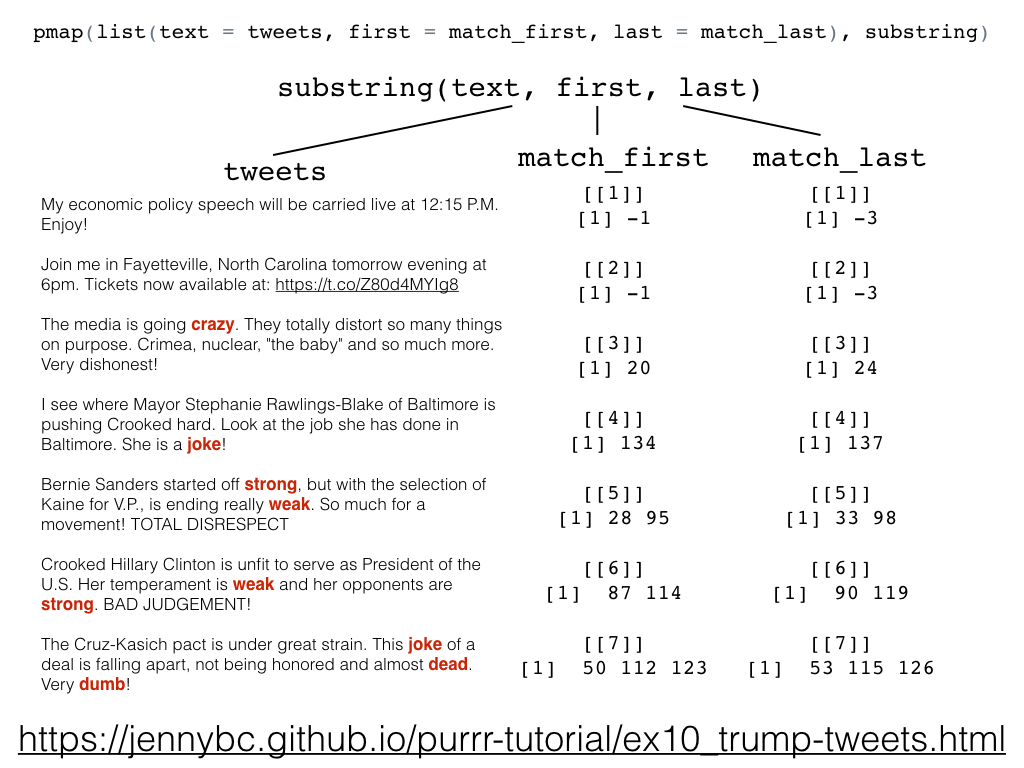

We’re ready to put everything together.

Pseudo-code for how we do this for a single tweet:

text <- an element of tweets

first <- an element of match_first

last <- an element of match_last

substring(text, first, last)This is another map()-type problem, but instead of mapping over one list, we need to map over 3 lists in parallel.

There is no map3(). This calls for pmap(). Read the help on it!

Here’s the usage:

pmap(.l, .f, ...)How does this relate to our task?

.lis a list of lists = the 3 lists we need to work through in parallellist(text = tweets, first = match_first, last = match_last)- I deliberately choose the list names to match the argument names of

substring(). Why confuse yourself? Why rely on position when you don’t have to?

- Function

.fwill besubstring().

You do it!

pmap(list(text = tweets, first = match_first, last = match_last), substring)

#> [[1]]

#> [1] ""

#>

#> [[2]]

#> [1] ""

#>

#> [[3]]

#> [1] "crazy"

#>

#> [[4]]

#> [1] "joke"

#>

#> [[5]]

#> [1] "strong" "weak"

#>

#> [[6]]

#> [1] "weak" "strong"

#>

#> [[7]]

#> [1] "joke" "dead" "dumb"We did it!

March through the rows in a data frame

Remember that a data frame is, in fact, a list of equal-length vectors. Just like the .l argument of pmap(). So often it’s nice to work your problems in the context of a data frame, instead of using free-floating vectors or lists. Why?

- It’s safer. This makes it very hard for you to subset or reorder one of the pieces and forget to do the same to the others.

- It’s tidier. Your project is contained in one neat object. You can print it,

View()it,str(), etc. to get a sense of how things stand. This is more annoying if stuff is lying around in separate objects, so you’re less likely to catch problems quickly.

How would we do that post hoc here?

mdf <- tibble(

text = tweets,

first = match_first,

last = match_last

)

pmap(mdf, substring)

#> [[1]]

#> [1] ""

#>

#> [[2]]

#> [1] ""

#>

#> [[3]]

#> [1] "crazy"

#>

#> [[4]]

#> [1] "joke"

#>

#> [[5]]

#> [1] "strong" "weak"

#>

#> [[6]]

#> [1] "weak" "strong"

#>

#> [[7]]

#> [1] "joke" "dead" "dumb"What if we take it all from the top, using a data frame approach and being as concise as possible?

tibble(text = tweets,

first = gregexpr(regex, tweets)) %>%

mutate(match_length = map(first, ~ attr(.x, which = "match.length")),

last = map2(first, match_length, ~ .x + .y - 1)) %>%

select(-match_length) %>%

pmap(substring)

#> [[1]]

#> [1] ""

#>

#> [[2]]

#> [1] ""

#>

#> [[3]]

#> [1] "crazy"

#>

#> [[4]]

#> [1] "joke"

#>

#> [[5]]

#> [1] "strong" "weak"

#>

#> [[6]]

#> [1] "weak" "strong"

#>

#> [[7]]

#> [1] "joke" "dead" "dumb"Yes, it all boils down to this.

Appendix

If you just wanted to solve this problem, you’d post-process the output of gregexpr() with regmatches().

regmatches(tweets, gregexpr(regex, tweets))Or you’d use the stringr or stringi packages to avoid gregexpr() altogether.

Have a look at regmatches() source and compare to ours. Note that, by necessity, there has to be more error checking and consideration for encoding and locale. So it’s not directly comparable. But you’ll see plenty of calls to the base equivalent of map(), Map(), and the same functions we’re using, i.e., attr() and substring().

regmatches

#> function (x, m, invert = FALSE)

#> {

#> if (length(x) != length(m))

#> stop(gettextf("%s and %s must have the same length",

#> sQuote("x"), sQuote("m")), domain = NA)

#> ili <- is.list(m)

#> itype <- "chars"

#> useBytes <- if (ili)

#> any(unlist(lapply(m, attr, "index.type")) == "bytes")

#> else any(attr(m, "index.type") == "bytes")

#> if (useBytes) {

#> itype <- Encoding(x) <- "bytes"

#> }

#> if (!ili && isFALSE(invert)) {

#> so <- m[ind <- (!is.na(m) & (m > -1L))]

#> eo <- so + attr(m, "match.length")[ind] - 1L

#> return(substring(x[ind], so, eo))

#> }

#> y <- if (is.na(invert)) {

#> Map(function(u, so, ml) {

#> if ((n <- length(so)) == 1L) {

#> if (is.na(so))

#> return(NA_character_)

#> else if (so == -1L)

#> return(u)

#> }

#> eo <- so + ml - 1L

#> if (n > 1L) {

#> if (any(eo[-n] >= so[-1L]))

#> stop(gettextf("need non-overlapping matches for %s",

#> sQuote("invert = NA")), domain = NA)

#> }

#> beg <- c(1L, c(rbind(so, eo + 1L)))

#> end <- c(c(rbind(so - 1L, eo)), nchar(u, itype))

#> substring(u, beg, end)

#> }, x, m, if (ili)

#> lapply(m, attr, "match.length")

#> else attr(m, "match.length"), USE.NAMES = FALSE)

#> }

#> else if (invert) {

#> Map(function(u, so, ml) {

#> if ((n <- length(so)) == 1L) {

#> if (is.na(so))

#> return(NA_character_)

#> else if (so == -1L)

#> return(u)

#> }

#> beg <- if (n > 1L) {

#> eo <- so + ml - 1L

#> if (any(eo[-n] >= so[-1L]))

#> stop(gettextf("need non-overlapping matches for %s",

#> sQuote("invert = TRUE")), domain = NA)

#> c(1L, eo + 1L)

#> }

#> else {

#> c(1L, so + ml)

#> }

#> end <- c(so - 1L, nchar(u, itype))

#> substring(u, beg, end)

#> }, x, m, if (ili)

#> lapply(m, attr, "match.length")

#> else attr(m, "match.length"), USE.NAMES = FALSE)

#> }

#> else {

#> Map(function(u, so, ml) {

#> if (length(so) == 1L) {

#> if (is.na(so) || (so == -1L))

#> return(character())

#> }

#> substring(u, so, so + ml - 1L)

#> }, x, m, lapply(m, attr, "match.length"), USE.NAMES = FALSE)

#> }

#> names(y) <- names(x)

#> y

#> }

#> <bytecode: 0x7f8977f3a3b8>

#> <environment: namespace:base>